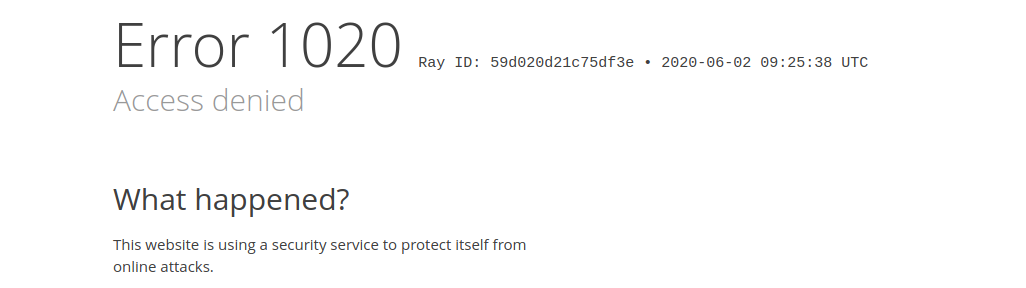

Cloudflare returns error 1020 when one of a website’s firewall rules detects suspicious activity from the client or browser used for scraping. The error means that the security service has automatically blocked traffic from that client or IP address to protect the website from potential attacks. From the site owner’s point of view, this mechanism helps preserve the integrity and confidentiality of the website and its data. From the scraper’s point of view, it means your requests were identified as unwanted traffic.

Why does error code 1020 appear?

Error 1020 can appear during website crawling when Cloudflare identifies suspicious activity from your client or browser. Common triggers include a non-browser User-Agent (typical of HTTP libraries), a headless browser that has not been adapted for web scraping, overly frequent requests, and behavior that deviates from normal user interaction.
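Before changing anything, it helps to confirm that a failed request really is a 1020 block. The helper below is a minimal sketch, assuming the block arrives as an HTTP 403 response whose body mentions the error code; the function name and the matched strings are illustrative assumptions, so check them against what the target site actually returns.

```python
def looks_like_error_1020(status_code, body):
    """Heuristically decide whether a response is a Cloudflare 1020 block.

    Assumption: the block is served with HTTP 403 and the body text
    contains "error code: 1020" or "Error 1020".
    """
    if status_code != 403:
        return False
    text = body.lower()
    return "error code: 1020" in text or "error 1020" in text
```

Call it with the status code and decoded body of any response your crawler receives, and switch strategy only when it returns `True`.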

How to deal with this error?

Use a proxy to hide your IP

One method worth trying is to use rotating proxies to hide your IP address. A rotating proxy automatically switches your IP address, which makes it harder for websites to detect and block you and increases your crawling success rate.

Note, however, that free proxies are often unreliable and easily detected, so your access may still be limited or blocked. We therefore recommend ScrapingBypass, which provides a large pool of residential IPs and supports automatic IP rotation for more stable and reliable access.

Other proxy providers also offer rotating proxies, but the quality and stability of their IP addresses vary, so choose one based on your specific needs. Whichever provider you choose, make sure it is reliable and stable enough to keep your web crawling jobs running smoothly.
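Proxy rotation can be sketched with the Python standard library alone. The example below is an illustration under stated assumptions: the proxy URLs are hypothetical placeholders, the helper names are made up for this sketch, and a simple round-robin order stands in for whatever rotation policy your provider implements.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; replace them with ones from your provider.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]

# Round-robin iterator over the proxy pool.
_proxy_cycle = itertools.cycle(PROXIES)


def next_proxy():
    """Return the next proxy URL in round-robin order."""
    return next(_proxy_cycle)


def build_opener_with_proxy(proxy_url):
    """Build a urllib opener that routes HTTP and HTTPS traffic via proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url, timeout=10.0):
    """Fetch url through the next proxy in the pool and return the raw body."""
    opener = build_opener_with_proxy(next_proxy())
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Each call to `fetch` goes out through a different proxy, so consecutive requests do not share an IP address. A production crawler would also drop proxies that fail repeatedly.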

Customize and Rotate User-Agent Headers

If you encounter error 1020 during web crawling, Cloudflare may have flagged your client’s signature. In that case, one solution is to customize the User-Agent header in your crawling code. The User-Agent is an HTTP request header that identifies the type and version of the client sending requests to the server.

Firewalls use this string to detect and block bots, so setting realistic User-Agent values and rotating them is a useful tactic for avoiding error 1020. You can consult our list of the best user agents to find valid UA strings for your crawling code.
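User-Agent rotation might look like the sketch below, which picks a random browser-like string for each request. The strings in `USER_AGENTS` are illustrative examples that go out of date, and `build_request` is a hypothetical helper name, not part of any library.

```python
import random
import urllib.request

# Example browser User-Agent strings; keep a list like this up to date.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.1 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def build_request(url):
    """Create a request whose User-Agent is chosen at random from the pool."""
    headers = {"User-Agent": random.choice(USER_AGENTS)}
    return urllib.request.Request(url, headers=headers)
```

Pass the result to `urllib.request.urlopen` to send it. Keep in mind that the User-Agent is only one signal; a header that claims to be Chrome while the rest of the request looks like an HTTP library can still be flagged.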

Headless browser

A headless browser is a browser that runs without a GUI. It is useful for web scraping because it executes JavaScript and renders pages like a regular browser, so its traffic looks more like that of a human visitor and is less likely to trigger Cloudflare’s security features. Puppeteer, Playwright, and Selenium are popular choices, offering powerful automation and good scalability.

However, headless browsers have drawbacks, the most prominent being that Cloudflare can still detect them. In their default configuration they expose automation-specific properties, so they are easily identified and then restricted or banned.

To prevent this, you can use tools that mask the properties of a headless browser, such as undetected-chromedriver (a patched version of ChromeDriver). These tools hide browser fingerprints and other telltale characteristics from Cloudflare, helping your scraper get past anti-bot detection.
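As a rough sketch of this approach, the code below drives Chrome through the third-party `undetected_chromedriver` package. It assumes that package and a local Chrome installation are available; the helper names and the window size are illustrative choices, and nothing here guarantees that a given site will let the browser through.

```python
def build_chrome_arguments(headless=True):
    """Return the command-line arguments passed to Chrome in this sketch."""
    arguments = ["--window-size=1280,800"]
    if headless:
        # Chrome's newer headless mode, which behaves closer to a normal window.
        arguments.append("--headless=new")
    return arguments


def fetch_page_source(url, headless=True):
    """Load url in a patched Chrome instance and return the rendered HTML."""
    # Imported lazily so this module loads even when the optional
    # third-party dependency is not installed.
    import undetected_chromedriver as uc

    options = uc.ChromeOptions()
    for argument in build_chrome_arguments(headless):
        options.add_argument(argument)

    driver = uc.Chrome(options=options)
    try:
        driver.get(url)
        return driver.page_source
    finally:
        # Always close the browser, even if the page load fails.
        driver.quit()
```

If headless mode is still blocked, calling `fetch_page_source(url, headless=False)` runs a visible browser window instead, which is usually harder to tell apart from a regular user.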

Web Scraping API

ScrapingBypass is a web scraping API that combines several techniques to get past Cloudflare’s 1020 errors for you. It offers dependable data extraction and should cover most scraping requirements.